Diffusion Language Model: The Rise of a New Paradigm for LLM? A Revolutionary Breakthrough That Overturns Autoregressive LLM


The current situation and challenges in the LLM field

Large language models (LLMs) have dominated natural language processing in recent years, represented by the DeepSeek series, OpenAI’s GPT series, and Anthropic’s Claude series. These models are mainly based on autoregressive architectures: they generate text one token at a time, and each token depends on all of the preceding text. This sequential process is inefficient for long sequences, and it creates significant latency and compute-cost bottlenecks, especially in real-time applications and complex reasoning tasks.
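To make the sequential bottleneck concrete, here is a minimal sketch of an autoregressive decoding loop. The `toy_next_token` function is a hypothetical stand-in for a real model's forward pass (it just picks a random word); the point is only that the loop needs one pass per generated token and that each pass must wait for the previous one.

```python
import random

VOCAB = ["the", "cat", "sat", "on", "mat", "."]

def toy_next_token(prefix, rng):
    """Hypothetical stand-in for one forward pass of an autoregressive model."""
    return rng.choice(VOCAB)

def generate_autoregressive(prompt, num_new_tokens, seed=0):
    rng = random.Random(seed)
    tokens = list(prompt)
    forward_passes = 0
    for _ in range(num_new_tokens):
        # Each new token is conditioned on the full prefix,
        # so these steps cannot run in parallel.
        tokens.append(toy_next_token(tokens, rng))
        forward_passes += 1
    return tokens, forward_passes

tokens, passes = generate_autoregressive(["the"], num_new_tokens=8)
print(passes)  # one forward pass per generated token
```

Generating N tokens this way always costs N dependent forward passes, which is where the latency in long outputs comes from.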

Introduction of the diffusion model: from images to text

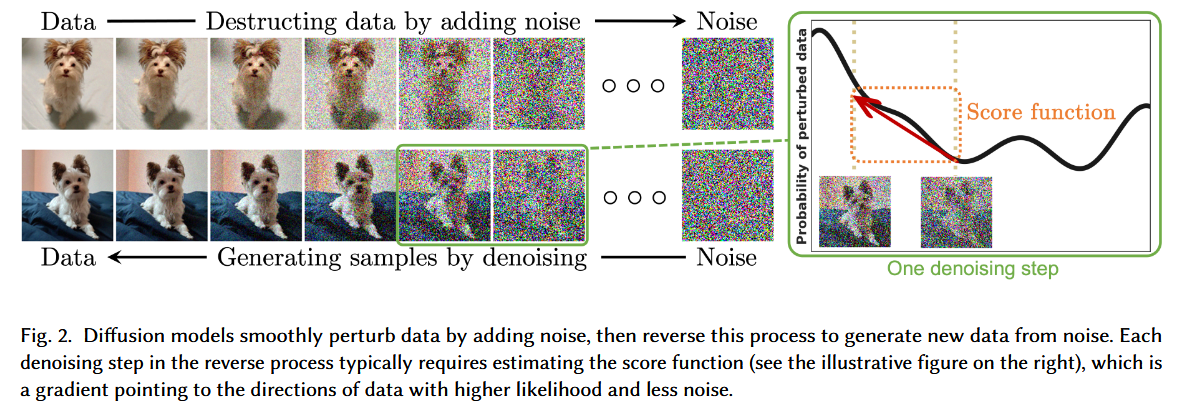

Diffusion models first emerged in image generation, in systems such as Stable Diffusion and DALL-E 2, which generate high-quality images by gradually refining random noise. The core idea is to learn a reverse process that gradually “denoises” a high-noise state into clean data.

Adapting diffusion models to discrete data such as text has become an active research topic. A diffusion language model (dLLM) applies this technique to text generation, usually starting from a masked or noisy token sequence and producing the final result over multiple refinement iterations.
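For text, the "noise" is often a mask token rather than Gaussian noise. The sketch below illustrates one common way to corrupt a sequence, assuming each token is independently replaced by `[MASK]` with probability `t`; the function name and the mask symbol are illustrative choices, not taken from any specific model.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, t, rng):
    """Replace each token with MASK independently with probability t (0 <= t <= 1)."""
    return [MASK if rng.random() < t else tok for tok in tokens]

rng = random.Random(0)
sentence = "diffusion models refine text over several steps".split()
for t in (0.0, 0.5, 1.0):
    # t = 0.0 leaves the text intact; t = 1.0 masks every token.
    print(t, mask_tokens(sentence, t, rng))
```

A dLLM is trained to undo this corruption, so that at generation time it can start from a fully masked sequence and fill it in over several iterations.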

MDLM: A research breakthrough for dLLMs

MDLM (Masked Diffusion Language Models) is a research implementation of diffusion language models proposed by Subham Sekhar Sahoo and colleagues in the paper “Simple and Effective Masked Diffusion Language Models”, published at NeurIPS 2024. At its core is a masking-based discrete diffusion model trained with a compact Rao-Blackwellized objective, which does not require the more complex continuous-time Markov chain (CTMC) theory.
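In practice, an objective of this kind reduces to a weighted masked-language-modeling loss: cross-entropy on the masked positions, scaled by a factor that depends on the noise level. The following is a simplified sketch, not the paper's implementation. It assumes a linear schedule in which each token is masked with probability `t`, which gives a `1/t` weight; `uniform_model` is a hypothetical placeholder for the denoising network.

```python
import math
import random

MASK = -1  # hypothetical id for the mask token

def uniform_model(noisy_tokens, vocab_size):
    """Hypothetical placeholder denoiser: uniform distribution at every position."""
    return [[1.0 / vocab_size] * vocab_size for _ in noisy_tokens]

def masked_diffusion_loss(tokens, vocab_size, rng, model=uniform_model):
    # Sample a noise level t, bounded away from 0 so that 1/t stays finite.
    t = rng.uniform(1e-3, 1.0)
    noisy = [MASK if rng.random() < t else tok for tok in tokens]
    probs = model(noisy, vocab_size)
    # Cross-entropy only on masked positions; unmasked tokens contribute nothing.
    nll = sum(-math.log(probs[i][tok])
              for i, tok in enumerate(tokens) if noisy[i] == MASK)
    return nll / t  # assumed 1/t weighting from a linear masking schedule

loss = masked_diffusion_loss([3, 1, 4, 1, 5], vocab_size=10, rng=random.Random(0))
print(loss)
```

Averaging this quantity over many sequences and noise levels gives a training signal that looks much like BERT-style masked-token prediction, which is part of why the approach is described as simple.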

- Training and Performance: MDLM relies on modern engineering practices, including careful tokenization (for example, avoiding the small 8k vocabulary used by D3PM), a numerically stable implementation, and a Diffusion Transformer (DiT) backbone combined with rotary position embeddings. When trained on 327B tokens, it reaches a perplexity (PPL) of ≤ 23.00 on the LM1B dataset, close to the 20.86 of an autoregressive Transformer trained on the same number of tokens.

Here is the key performance comparison table:

- Generalization ability: MDLM outperforms SEDD in zero-shot perplexity and performs well on datasets such as PTB, Wikitext, and LM1B. On some datasets, such as Lambada and the scientific-paper datasets, it even outperforms autoregressive models, which is attributed to the robustness of its unmasking-based objective.

The following is a comparison of zero-shot perplexity:

- Related work: MDLM shows higher efficiency and performance than earlier discrete diffusion models such as D3PM (Discrete Denoising Diffusion Probabilistic Models) and SEDD (Score Entropy Discrete Diffusion).
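Perplexity, the metric used in the comparisons above, is the exponential of the average negative log-likelihood per token, so lower is better. A minimal sketch follows, with an illustrative input rather than real model output. For diffusion models the likelihood is typically only bounded, which is why MDLM's figure is reported with a "≤" sign.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp of the mean negative log-likelihood per token (natural log)."""
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)

# A model that assigns probability 0.25 to every token is as uncertain
# as a uniform choice among 4 options.
uniform_over_4 = [math.log(0.25)] * 10
print(perplexity(uniform_over_4))  # approximately 4
```

Read this way, a perplexity near 21 to 23 on LM1B means the model is, on average, about as uncertain as a uniform choice among roughly 21 to 23 tokens at each position.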

Mercury: A milestone in commercial dLLMs

On February 26, 2025, Inception Labs released Mercury Coder, which it describes as the first commercial-scale dLLM. Inception Labs was founded by Stanford professor Stefano Ermon and others, and its team includes MDLM paper co-author Volodymyr Kuleshov, which shows the close connection between research and commercialization.

- Performance Highlights: Mercury Coder uses a coarse-to-fine generation method, unlike the left-to-right sequential generation of autoregressive models. Its reported generation speed reaches 1000 tokens per second on NVIDIA H100 GPUs, 5 to 10 times faster than traditional LLMs. Early benchmarks suggest that its quality is comparable to GPT-4o Mini and Claude 3.5 Haiku at lower cost.

- Application Scenarios: Mercury supports API access and local deployment, and it targets real-time generation tasks such as AI agents, complex reasoning, and controllable generation. Inception Labs states that dLLMs can improve agent efficiency, reduce hallucinations, and support non-sequential token generation.

- Technical Details: Mercury’s diffusion model starts from pure noise and produces text through a small number of refinement steps. Unlike traditional autoregressive models (such as the GPT series), which need one sequential step per token, Mercury updates many tokens in parallel at each step, which significantly reduces the number of inference steps required to generate a complete text.
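Mercury's actual decoding algorithm is not described here, so the following is only an illustrative sketch of coarse-to-fine generation in the masked-diffusion style. It assumes a fully masked starting sequence and commits the most confident proposals at each step. The `toy_denoiser` is a hypothetical stand-in that already knows the target text and returns random confidence scores; a real model would predict both.

```python
import random

MASK = "[MASK]"
TARGET = "def add ( a , b ) : return a + b".split()

def toy_denoiser(tokens, rng):
    """Hypothetical single parallel pass: a (token, confidence) proposal per masked position."""
    return {i: (TARGET[i], rng.random())
            for i, tok in enumerate(tokens) if tok == MASK}

def generate_by_unmasking(length, num_steps, seed=0):
    rng = random.Random(seed)
    tokens = [MASK] * length
    for step in range(num_steps):
        proposals = toy_denoiser(tokens, rng)
        if not proposals:
            break
        # Commit an equal share of the remaining masks at each step,
        # most confident proposals first.
        remaining_steps = num_steps - step
        k = -(-len(proposals) // remaining_steps)  # ceiling division
        best = sorted(proposals, key=lambda i: proposals[i][1], reverse=True)[:k]
        for i in best:
            tokens[i] = proposals[i][0]
    return tokens

result = generate_by_unmasking(len(TARGET), num_steps=4)
print(" ".join(result))
```

In this toy run, 12 tokens are produced in 4 parallel passes, where an autoregressive loop would need 12 sequential ones. Tokens are also filled in out of order, which is the non-sequential behavior the text refers to.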

Advantages and Controversies of Diffusion Language Models

The parallel generation capability of dLLMs can significantly reduce inference latency and computational cost, which is particularly important for real-time applications such as chatbots and code generation. The MDLM research results and Mercury’s commercialization indicate that dLLMs have potential in speed and efficiency. The open question is whether their quality and robustness can match or surpass autoregressive models over the long term.

Mercury claims significant advantages in speed and cost: it is reported to be up to 10 times faster than existing models, which makes it especially suitable for high-concurrency scenarios, and its lower compute requirements reduce enterprise deployment costs. Mercury also introduces support for non-sequential generation and error correction, which improves the model’s reasoning ability and controllability and opens up more possibilities for practical applications.
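As a rough back-of-the-envelope check on what these speed claims mean for a user, the sketch below converts throughput into waiting time. The 1000 tokens per second figure and the 5x to 10x range come from the claims quoted in this article; the 2000-token response length is a hypothetical example.

```python
def generation_seconds(num_tokens, tokens_per_second):
    """Time to stream num_tokens at a steady throughput."""
    return num_tokens / tokens_per_second

response_tokens = 2000  # hypothetical response length
dllm_tps = 1000         # throughput claimed for Mercury Coder in the text

for speedup in (5, 10):
    baseline_tps = dllm_tps / speedup
    print(speedup,
          generation_seconds(response_tokens, dllm_tps),
          generation_seconds(response_tokens, baseline_tps))
```

Under these assumptions, the response takes 2 seconds at the claimed dLLM speed and 10 to 20 seconds for a model that is 5 to 10 times slower.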

However, Mercury’s generation quality in practical applications remains contested. Although diffusion models approach autoregressive models in perplexity, their coherence and context understanding still need further verification. It is also unclear whether the approach suits all tasks, such as long-text generation and complex dialogue; more research and practice are needed to map the boundaries of its applicability.

Future Outlook: The Possibility of a New Paradigm

The rise of diffusion language models may mark a new paradigm shift in the LLM field. Potential impacts include:

- Faster user experience and reduced waiting time.

- Lower operating costs, which broadens the adoption of AI applications.

- New application scenarios, such as real-time multimodal tasks (combining images and text).

However, these expectations still need to be supported by more data from practical applications. Inception Labs’ Mercury is only the first step; more dLLM products may enter the market, and competition will drive further technical progress.
